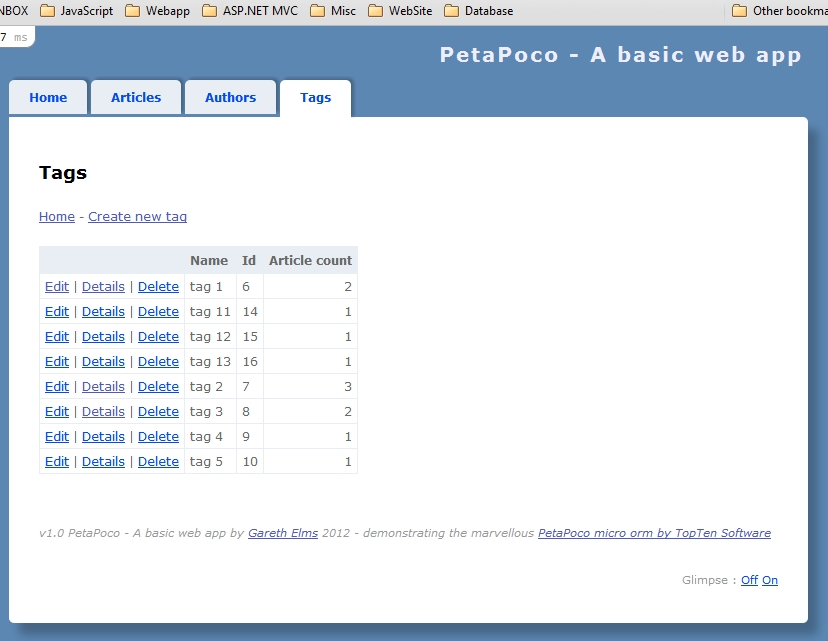

Many-to-many relationships with PetaPoco

A classic example of a many-to-many relationship in a database is blog post tags. A blog post can have many tags and a tag can belong to many blog posts. So how do you do this in PetaPoco? I’ve added tag support to my PetaPoco – A Simple Web App project on GitHub so I’ll explain what I did.

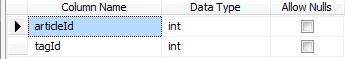

To start with you need to create a link table in your database which will stitch the article and tag tables together. So I created the articleTag table to contain lists of paired article ids and tag ids. This is all the info we need to persist this relationship in the database.

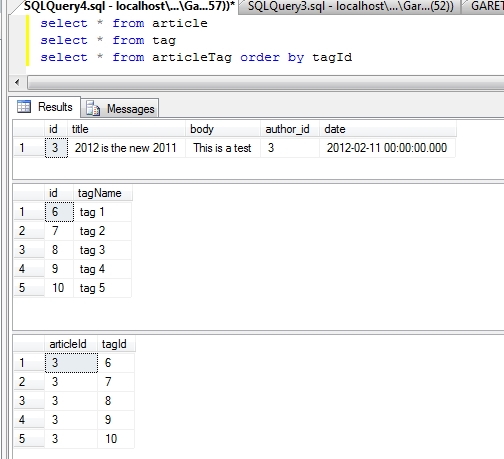

So if we have an article with 5 tags you’ll end up with 5 records in articleTag like this :

Creating these records is a one-liner if you have the article and tag ids :

    _database.Execute(
        "insert into articleTag values(@0, @1)",
        article.Id, tagId);

There is a bit more complexity when it comes to reading article pocos and their tags. There are two ways of doing it :

The naughty+1 way

You could do this :

- Load the articles without the tags.

- Loop through each article and retrieve its tags

- Assign the list of tags to each article in the loop.

This is the N+1 problem and it doesn’t scale well. The more articles you’re loading the more database round trips you’ll make and the slower your app will run. Nobody should recommend this approach, but let’s see it anyway :

    public Article RetrieveById( int articleId)
    {
        var article = _database.Query<Article, Author>(
            "select * from article " +
            "join author on author.id = article.author_id " +
            "where article.id=@0 " +
            "order by article.date desc", articleId)
            .Single<Article>();

        var tags = _database.Fetch<Tag>(
            "select * from tag " +
            "join articleTag on articleTag.tagId = tag.id " +
            "and articleTag.articleId=@0", articleId);

        if( tags != null) article.Tags = tags;

        return article;
    }

This example is just for one article. Imagine if we were pulling back 50 articles. That would be 51 database round trips when all we really need is 1.
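To make the round trip arithmetic concrete, here is a minimal sketch that counts queries for both approaches. It is written in JavaScript rather than C# so it runs without a database, and the FakeDb class and its method names are hypothetical stand-ins, not PetaPoco calls.

```javascript
// In-memory stand-in for a database that counts round trips.
// Table and column names follow the post; FakeDb itself is hypothetical.
class FakeDb {
  constructor(articles, articleTags) {
    this.articles = articles;
    this.articleTags = articleTags;
    this.roundTrips = 0;
  }

  // One round trip: articles only, no tags.
  fetchArticles() {
    this.roundTrips++;
    return this.articles.map(a => ({ ...a, tags: [] }));
  }

  // One round trip: the tags for a single article.
  fetchTagsFor(articleId) {
    this.roundTrips++;
    return this.articleTags
      .filter(link => link.articleId === articleId)
      .map(link => link.tagName);
  }

  // One round trip: every article with its tags, as a joined query would give.
  fetchArticlesWithTags() {
    this.roundTrips++;
    return this.articles.map(a => ({
      ...a,
      tags: this.articleTags
        .filter(link => link.articleId === a.id)
        .map(link => link.tagName),
    }));
  }
}

function makeDb(articleCount) {
  const articles = [];
  const links = [];
  for (let id = 1; id <= articleCount; id++) {
    articles.push({ id, title: "Article " + id });
    links.push({ articleId: id, tagName: "petapoco" });
  }
  return new FakeDb(articles, links);
}

// The N+1 way: one query for the articles, then one more per article.
const naughty = makeDb(50);
for (const article of naughty.fetchArticles()) {
  article.tags = naughty.fetchTagsFor(article.id);
}

// The joined way: a single query.
const joined = makeDb(50);
joined.fetchArticlesWithTags();

console.log(naughty.roundTrips, joined.roundTrips); // 51 1
```

The counter grows with the number of articles in the first case and stays at 1 in the second, which is the whole argument in two numbers.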

The right way

    public Article RetrieveById( int articleId)
    {
        return _database.Fetch<Article, Author, Tag, Article>(
            new ArticleRelator().Map,
            "select * from article " +
            "join author on author.id = article.author_id " +
            "left outer join articleTag on " +
            "articleTag.articleId = article.id " +
            "left outer join tag on tag.id=articleTag.tagId " +
            "where article.id=@0 ", articleId).Single();
    }

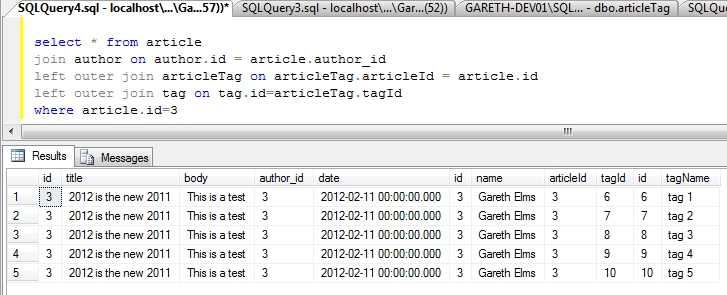

Here I am pulling back a dataset that includes the article record, the author record and the tag records. You can tell this is a many-to-many relationship by the double joining first on the articleTag then on tag itself. The results that come back to PetaPoco look like this :

As you can see there is a fair bit of duplication here, and this is a trade-off you will want to think carefully about. The trade-off is between the number of database round trips (the number of queries) and result set efficiency (the network traffic from the SQL server to the web server or service layer server). It is best to have as few database round trips as possible, but it is also better to have lean result sets. Here I’m sticking with the right way.

If I were writing a real blogging app I would think long and hard about a single joined query like this because the body content, which could be thousands of bytes, would be returned as many times as there are tags against the blog post. I would almost certainly use a stored procedure to return multiple result sets so there is still only one database round trip. However typical non-blogging datasets, e.g. orders and order lines, won’t contain such unlimited text data, so there’s no problem.
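A rough back-of-envelope sketch of that duplication, counting only the body column. The function name, strategy labels and numbers are made up for illustration; it is JavaScript so it can be run anywhere.

```javascript
// Rough payload estimate for one article, counting only the body column.
function bodyBytesReturned(bodyLength, tagCount, strategy) {
  if (strategy === "single-join") {
    // The left outer join yields one row per tag (or one row when there
    // are no tags), and every row repeats the article columns.
    const rows = Math.max(tagCount, 1);
    return bodyLength * rows;
  }
  // "two-result-sets": the article row once, the tag rows separately.
  return bodyLength;
}

const body = 8000; // bytes of article text (invented figure)
const tags = 5;

console.log(bodyBytesReturned(body, tags, "single-join"));     // 40000
console.log(bodyBytesReturned(body, tags, "two-result-sets")); // 8000
```

With short rows such as order lines the multiplier barely matters; with a long body column it dominates the result set.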

Document databases suit this type of arrangement. The tags would be embedded in the article document and would still be indexable.

Stitching the pocos together

See that new ArticleRelator().Map line above? PetaPoco can utilise a relator helper function for multi-poco queries so that each poco is correctly assigned in the data hierarchy. Having the function wrapped in a class instance means it can remember the previous poco in the results.

If you’re using multi-poco queries I urge you to read the PetaPoco documentation on the subject and experiment for an hour or two. All the relator class does is take the pocos coming in from each row in the resultset and stitch them together. It allows me to add each tag to the article as well as assign the author to the article.
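Here is a minimal sketch of the stitching idea, reduced to plain objects in JavaScript so it runs without a database. It assumes the relator convention as I understand it: the callback returns null while it is still collecting rows for the current article, returns the finished article when a new one starts, and is called one last time with nulls to flush the final article. The fetchWithRelator function is a hypothetical stand-in for what the library does with the callback, and the sample rows are invented.

```javascript
// A relator in the PetaPoco style, reduced to plain objects.
class ArticleRelator {
  constructor() {
    this.current = null; // the article being assembled
  }

  map(article, author, tag) {
    // End of the result set: hand back whatever is still pending.
    if (article === null) return this.current;

    // Same article as the previous row: just collect the extra tag.
    if (this.current !== null && this.current.id === article.id) {
      if (tag.id !== null) this.current.tags.push(tag);
      return null;
    }

    // A new article has started: emit the previous one and begin again.
    const previous = this.current;
    this.current = { ...article, author, tags: [] };
    // A left outer join gives an all-null tag for untagged articles; skip it.
    if (tag.id !== null) this.current.tags.push(tag);
    return previous;
  }
}

// Hypothetical stand-in for the library: feed each row to the relator,
// keep the non-null results, then flush with nulls.
function fetchWithRelator(rows, relator) {
  const results = [];
  for (const row of rows) {
    const done = relator.map(row.article, row.author, row.tag);
    if (done !== null) results.push(done);
  }
  const last = relator.map(null, null, null);
  if (last !== null) results.push(last);
  return results;
}

const rows = [
  { article: { id: 1, title: "Hello" }, author: { id: 7, name: "Ann" }, tag: { id: 10, tagName: "csharp" } },
  { article: { id: 1, title: "Hello" }, author: { id: 7, name: "Ann" }, tag: { id: 11, tagName: "sql" } },
  { article: { id: 2, title: "Untagged" }, author: { id: 7, name: "Ann" }, tag: { id: null, tagName: null } },
];

const articles = fetchWithRelator(rows, new ArticleRelator());
console.log(articles.map(a => a.title + ": " + a.tags.map(t => t.tagName).join(",")));
// [ 'Hello: csharp,sql', 'Untagged: ' ]
```

Note that this only works if rows for the same article arrive next to each other, so the query needs to keep them grouped.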

Turn around sir

And what about from the other angle, where we have a tag and we want to know the articles using the tag? This is the essence of many-to-many: there are two contexts.

    public Tag RetrieveByTag( string tagName)
    {
        return _database.Fetch<Tag, Article, Tag>(
            new TagRelator().Map,
            "select * from tag " +
            "left outer join articleTag on articleTag.tagId = tag.id " +
            "left outer join article on " +
            "article.id = articleTag.articleId " +
            "where tag.tagName=@0 order by tag.tagName asc", tagName)
            .SingleOrDefault();
    }

This is the same process as retrieving articles, but with the tables reversed. One tag has many articles in this context.

More responsibilities

Having many-to-many relationships does add more responsibilities to your app. For example when deleting an author it’s no longer sufficient to just delete the author’s articles followed by the author record. I have to remove the author’s articles’ tags from the articleTag table too, otherwise they become data orphans pointing to articles that no longer exist. And because we’re performing multiple database calls that must all succeed (or none at all), we need a transaction. Like this :

    public bool Delete( int authorId)
    {
        using( var scope = _database.GetTransaction())
        {
            _database.Execute(
                "delete from articleTag where articleTag.articleId in " +
                "(select id from article where article.author_id=@0)",
                authorId);

            _database.Execute( "delete from article where author_id=@0", authorId);

            _database.Execute( "delete from author where id=@0", authorId);

            scope.Complete();
        }

        return true;
    }
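The effect of those three deletes can be checked on in-memory tables. This is only a sketch in JavaScript with invented data: deleteAuthor returns a new set of tables instead of mutating the old one, which is a crude stand-in for the all-or-nothing behaviour of the transaction, and orphanedLinks is a hypothetical helper for spotting link rows that point at missing articles.

```javascript
// Applies the same three deletes to in-memory tables and returns the result.
function deleteAuthor(db, authorId) {
  const articleIds = db.article
    .filter(a => a.author_id === authorId)
    .map(a => a.id);

  return {
    // 1. link rows for the author's articles
    articleTag: db.articleTag.filter(link => !articleIds.includes(link.articleId)),
    // 2. the author's articles
    article: db.article.filter(a => a.author_id !== authorId),
    // 3. the author record
    author: db.author.filter(a => a.id !== authorId),
    // tags are shared between articles, so they stay
    tag: db.tag,
  };
}

// Link rows whose article no longer exists.
function orphanedLinks(db) {
  const ids = new Set(db.article.map(a => a.id));
  return db.articleTag.filter(link => !ids.has(link.articleId));
}

const db = {
  author: [{ id: 1 }, { id: 2 }],
  article: [{ id: 10, author_id: 1 }, { id: 11, author_id: 2 }],
  tag: [{ id: 100 }],
  articleTag: [{ articleId: 10, tagId: 100 }, { articleId: 11, tagId: 100 }],
};

const after = deleteAuthor(db, 1);
console.log(after.articleTag.length, orphanedLinks(after).length); // 1 0
```

Skipping the first step would leave a link row pointing at article 10, which is exactly the orphan described above.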

Adding many-to-many support to PetaPoco – A Simple Web App was fairly painless and should fill a small hole in the internet. I’ve had a few people ask about it and it seemed the natural next step for the project.

Am I doing many-to-many wrongly? Would you do it differently? Let me know so I can learn from you.

Javascript Sandbox for ASP.NET MVC 3

I’ve started reading more about Javascript this year. My home project is coming up to the point where I’m making some important client side decisions and I want to make sure I’m making the best choices.

As anyone who has looked beyond the surface will tell you, Javascript is deceptively complex and capable. I have four books helping me out at the moment :

- Javascript Web Applications by Alex MacCaw

- Javascript Enlightenment by Cody Lindley

- Javascript : The Good Parts by Douglas Crockford

- Javascript Patterns by Stoyan Stefanov

I recommend all four. I started reading Javascript Web Applications by Alex MacCaw because I wanted to understand how Javascript frameworks like KnockoutJS, BackboneJS and JavascriptMVC are constructed rather than just use them and this book is exactly for that purpose. For me it quickly proved to be a bit too “in at the deep end” so I’ve switched to Javascript Enlightenment by Cody Lindley to get a solid and deep understanding of Javascript.

All these books have code examples and I learn best by following, experimenting and pushing the samples with questions that pop up. So how does an ASP.NET MVC developer play around with Javascript?

- You could have a folder full of HTML files and chuck your javascript in there, and run them individually

- You could use JS Fiddle

- You could spend three hours creating a modest little sandbox framework built as an ASP.NET MVC app

I did the last one. At one point I abandoned it and switched to JS Fiddle, but then I went back to it. I prefer messing about in my own environment sometimes.

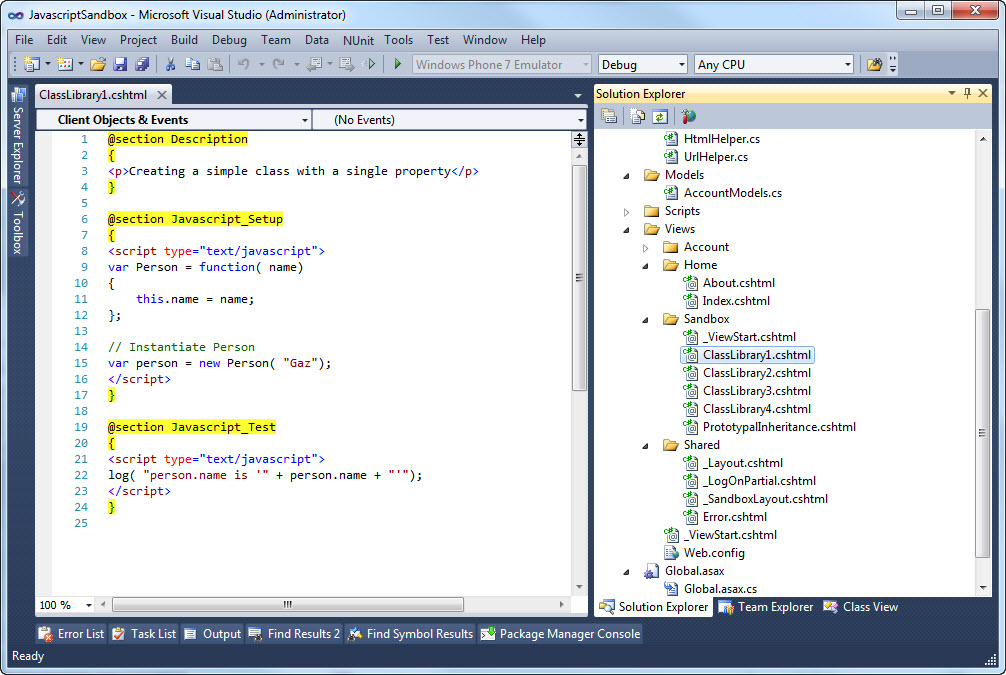

I’ll call your individual Javascript fiddles a sandcastle because I’m looking left and right and can’t see anyone telling me not to (although I am alone in my upstairs office). A Javascript sandcastle is created as a view like this :

- Description : Optional description of what your javascript is testing

- Javascript_Setup : Stick your setup code in here

- Javascript_Test : The code that runs against the Javascript_Setup code

Then edit the Home/Index view to add a link to your new test. As you can see from the screenshot in the link I’ve added the html helper @Html.SandboxLink(sandboxViewName,description) to simplify generating links to your Javascript tests. I want to automate this at some point in the future so you only need to create the views.

The advantage you will notice at this point is that you get Intellisense in your sandcastle because it’s all in an ASP.NET MVC view in Visual Studio. The other advantage comes from how the _SandboxLayout.cshtml page presents the results. Here’s what you see when you’ve run a test :

Your sandcastle is presented nicely and formatted using the terrific Syntax Highlighter by Alex Gorbatchev. There is a generous 500ms pause while I wait for the formatting to complete, then I run your test code. In your Javascript you can call the helper log() function which outputs to the Log area at the bottom, thus completing your test and showing you what happened. Logging helps you see the flow of the code, which isn’t always obvious with Javascript. Look at a slightly more complex example to see what I mean.

When I’ve grokked the good parts of Javascript the next step on my client side journey is Javascript unit testing. When I mention “test” in this blog post I’m not talking about unit testing an application’s code, I’m talking about isolated tests that’ll help you figure out some code – a tiny bit like how we use LinqPad (but that’s in a different league of course).

You can download this project from my Javascript Sandbox For ASP.NET MVC 3 Github page (I’m really enjoying using it to learn Javascript) or you could use JSFiddle and wonder with bemusement why I spent 3 hours putting this project together, 2 hours writing this blog post and 15 minutes messing about with Git when I could have finished the book by now and be back working on my home project.

My home project is such a long way off because every little step of the way I’m going down all these rabbit holes and discovering that rabbit droppings taste good.

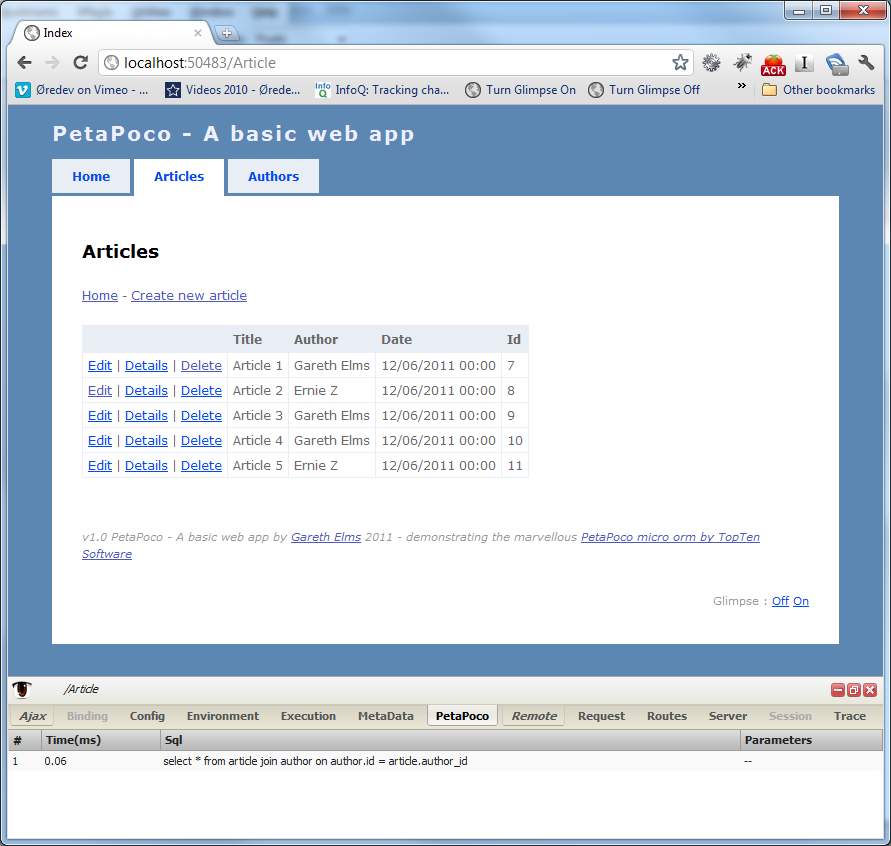

Added MVC Mini Profiler to PetaPoco – A simple web app

I saw a question on Stack Overflow asking how to set up Sam Saffron’s MVC Mini Profiler to work with PetaPoco and realised it would be a good idea to implement the mini profiler in my PetaPoco example app.

The latest release of PetaPoco included a change that made it very easy to integrate the profiler with PetaPoco if you want to. Prior to the latest version you had to hack the PetaPoco.cs file a little bit or implement your own db provider factory. Pleasingly there is now a virtual function called OnConnectionOpened(), which is invoked by PetaPoco when the database connection object is first created. Being a virtual function you can override it in a derived class and return the connection object wrapped in the MVC Mini Profiler’s ProfiledDbConnection class. Like this :

    public class DatabaseWithMVCMiniProfiler : PetaPoco.Database
    {
        public DatabaseWithMVCMiniProfiler(IDbConnection connection) : base(connection) { }

        public DatabaseWithMVCMiniProfiler(string connectionStringName) : base(connectionStringName) { }

        public DatabaseWithMVCMiniProfiler(string connectionString, string providerName) : base(connectionString, providerName) { }

        public DatabaseWithMVCMiniProfiler(string connectionString, DbProviderFactory dbProviderFactory) : base(connectionString, dbProviderFactory) { }

        public override IDbConnection OnConnectionOpened(
            IDbConnection connection)
        {
            // wrap the connection with a profiling connection that tracks timings
            return MvcMiniProfiler.Data.ProfiledDbConnection.Get( connection as DbConnection, MiniProfiler.Current);
        }
    }

I didn’t want to lose the existing Glimpse profiling I had in place so I made my class derive from DatabaseWithGlimpseProfiling, which is already in my project’s codebase, so you get both methods of profiling in one app.

public class DatabaseWithMVCMiniProfilerAndGlimpse : DatabaseWithGlimpseProfiling

This is turning into a profile demonstration app with a bit of PetaPoco chucked in :

You can download PetaPoco – A Simple Web App from my GitHub repository.

PetaPoco – A simple web app

I’ve put together a basic web app using PetaPoco to manage a one-to-many relationship between authors and their articles.

The author poco contains just the author’s name, id and a list of articles the author has written :

    public class Author
    {
        public int Id {get; set;}

        public string Name {get; set;}

        [ResultColumn]
        public List<Article> Articles {get; set;}

        public Author()
        {
            Articles = new List<Article>();
        }
    }

The Author.Articles list is a [ResultColumn], meaning that PetaPoco won’t try to persist it automatically; it’s ignored during inserts and updates. The Article poco has more properties :

    public class Article
    {
        public int? Id {get; set;}

        public string Title {get; set;}

        public string Body {get; set;}

        [DisplayFormat(DataFormatString = "{0:dd/MM/yyyy}", ApplyFormatInEditMode=true)]
        public DateTime Date {get; set;}

        [ResultColumn]
        public Author Author {get; set;}

        [Column( "author_id")]
        [DisplayName( "Author")]
        public int? AuthorId {get; set;}

        public Article()
        {
            Date = DateTime.Now;
        }
    }

The Date property has a date format attribute so that in the \Article\Edit.cshtml view @Html.EditorFor(model => model.Article.Date) will display the date how I want it. The Author property is another ResultColumn only used when displaying the Article, not when updating it. In the ArticleRepository I use the multi-poco functionality in PetaPoco to populate the Author when the Article record is retrieved.

Setup

By default the app uses the tempdb at .\SQLEXPRESS. This is defined in the web.config :

<add name="PetaPocoWebTest" connectionString="Data Source=.\SQLEXPRESS;Initial Catalog=tempdb;Integrated Security=True" providerName="System.Data.SqlClient" />

The tempdb is always present in SQL Server and is recreated when the database is stopped and started. You can change this to suit your environment. The app will auto-generate the tables for you if they don’t exist. In theory if you have SQL Express running you don’t need to do anything to get started.

A few details

There are two repository classes: AuthorRepository and ArticleRepository. These classes are where the PetaPoco work lives. As well as loading the Author, the AuthorRepository class also loads the list of articles by that author. I use a relationship relator callback class as demonstrated in the recent PetaPoco object relationship mapping blog post. I made a slight tweak to handle objects that have no child objects at all, otherwise there was always a new() version of Article in the list. The fix is simple :

    if( article.Id != int.MinValue)
    {
        // Only add this article if the details aren't blank
        _currentAuthor.Articles.Add( article);
    }

I tried to get this working with an int? Id, but had type conversion problems in PetaPoco. I’ll investigate that later as it would be useful to have int? Id to indicate a blank record.

I’ve used the Glimpse PetaPoco plugin by Schotime (Adam Schroder). Because the Glimpse plugin uses HttpContext.Current.Items all the SQL statements are lost when RedirectToAction() is called from a controller. I got around this by copying the PetaPoco Glimpse data into TempData before a redirect, and from TempData back into the Items collection before the controller executes.

This means you still see update/insert/delete SQL. It is definitely a requirement to perform a RedirectToAction() after such database calls so that it isn’t possible to refresh the screen and re-execute the commands (i.e. cause havoc). If the user did refresh the screen (or otherwise repeat the last request) they’d just get the redirect to action and not the request that fired the database action.

I expect this to be a common pattern with Glimpse plugins that use response redirects.

UPDATE

Many thanks to Schotime who showed me that the Remote tab in Glimpse shows you the last 5 requests. All the SQL is there in Glimpse if you look for it. I didn’t realise this when I added the TempData solution :) but at least using TempData the SQL commands prior to a redirect are visible right on the PetaPoco tab without having to drill down to the previous request, which I kinda like as it’s more intuitive and effortless.

Hope this is useful to someone. You can download the source at GitHub. This is my first project using git so if there are any problems please let me know.

Finally, I’ve cut my finger and I’m angry with my cat.

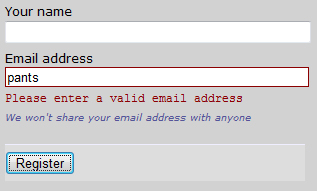

ASP.NET MVC [Remote] validation – what about success messages?

Since ASP.NET MVC 3 it’s easy to validate an individual form field by firing off an HTTP request to a controller action. This action returns either Json(true) if the value is valid or Json("error message") if it is not valid. If you return an error then jquery.validate.js will add your error message to the validation element for the field being validated. It’s easy to set up on your view model :

    [Required]
    [DataType(DataType.EmailAddress)]
    [Display(Name = "Email address")]
    [EmailAddress]
    [Remote("RemoteValidation_UniqueEmailAddress", "Account")]
    public string Email { get; set; }

When the user makes a change to the Email field the /Account/RemoteValidation_UniqueEmailAddress action is automatically called on the server. The server side action is just as simple to set up :

    public ActionResult RemoteValidation_UniqueEmailAddress( string Email)
    {
        if( Validation.IsEmailAddress( Email) == false)
        {
            return Json( "Please enter a valid email address", JsonRequestBehavior.AllowGet);
        }
        else if( m_oMembershipService.IsThisEmailAlreadyTaken( Email) == true)
        {
            return Json( "This email address is already in use", JsonRequestBehavior.AllowGet);
        }
        else
        {
            return Json( true, JsonRequestBehavior.AllowGet);
        }
    }
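On the client, the response is judged by one strict comparison: only the literal value true counts as valid, and anything else is treated as the message to display. A minimal sketch of that rule, mirroring the `response === true` and `response || defaultMessage` checks in jquery.validate's remote method. The function name, return shape and default message text are my own, not part of the plugin.

```javascript
// Only the literal value true counts as valid; anything else is an error.
function interpretRemoteResponse(response, defaultMessage) {
  if (response === true) {
    return { valid: true, message: null };
  }
  // false, null or "" fall back to the default message; a string is shown as-is.
  return { valid: false, message: response || defaultMessage };
}

console.log(interpretRemoteResponse(true, "Please fix this field."));
// { valid: true, message: null }
console.log(interpretRemoteResponse("This email address is already in use", "Please fix this field."));
// { valid: false, message: 'This email address is already in use' }
console.log(interpretRemoteResponse(false, "Please fix this field."));
// { valid: false, message: 'Please fix this field.' }
```

This is why the action returns Json(true) rather than, say, the string "true": a string would be displayed as an error message.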

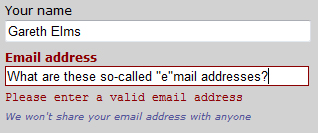

A remote validation error is displayed in exactly the same way as a view would render it. This is because the javascript latches onto the Html.ValidationMessageFor element you set up in your view :

This is ok isn’t it? But…

I want the Email Address label to be bold and red too

In the interests of best practice web form techniques I want the email address label to be bold and red, as well as the error message. To do this I had to hack jquery.validate.js so that it calls a local javascript function with the same name as the server function (with “_response” appended to it) :

    remote: function(value, element, param) {
        if ( this.optional(element) )
            return "dependency-mismatch";

        var previous = this.previousValue(element);
        if (!this.settings.messages[element.name] )
            this.settings.messages[element.name] = {};
        previous.originalMessage = this.settings.messages[element.name].remote;
        this.settings.messages[element.name].remote = previous.message;

        param = typeof param == "string" && {url:param} || param;

        if ( previous.old !== value ) {
            previous.old = value;
            var validator = this;
            this.startRequest(element);
            var data = {};
            data[element.name] = value;
            $.ajax($.extend(true, {
                url: param,
                mode: "abort",
                port: "validate" + element.name,
                dataType: "json",
                data: data,
                success: function(response) {
                    //START OF HACK
                    var aParts = param.url.split(/\//);//eg; param.url = "/Account/RemoteValidation_UniqueEmailAddress" ELMS
                    var oCustomResultFunction = aParts[aParts.length - 1] + "_response";//ELMS
                    //END OF HACK
                    validator.settings.messages[element.name].remote = previous.originalMessage;
                    var valid = response === true;
                    if ( valid ) {
                        var submitted = validator.formSubmitted;
                        validator.prepareElement(element);
                        validator.formSubmitted = submitted;
                        validator.successList.push(element);
                        //START OF HACK
                        var bShowErrors = true;
                        if( typeof(window[oCustomResultFunction]) == "function")
                        {
                            bShowErrors = window[oCustomResultFunction]( true, null, validator);
                        }
                        //END OF HACK
                        if( bShowErrors)//HACK
                        {
                            validator.showErrors();
                        }
                    }
                    else
                    {
                        var errors = {};
                        var message = (previous.message = response || validator.defaultMessage( element, "remote" ));
                        errors[element.name] = $.isFunction(message) ? message(value) : message;
                        //START OF HACK
                        var bShowErrors = true;
                        if( typeof(window[oCustomResultFunction]) == "function")
                        {
                            bShowErrors = window[oCustomResultFunction]( false, errors, validator);
                        }
                        //END OF HACK
                        if( bShowErrors)//HACK
                        {
                            validator.showErrors(errors);
                        }
                    }
                    previous.valid = valid;
                    validator.stopRequest(element, valid);
                }
            }, param));
            return "pending";
        } else if( this.pending[element.name] ) {
            return "pending";
        }
        return previous.valid;
    },

And now a client side javascript function called RemoteValidation_UniqueEmailAddress_response will be called with the result of the remote validation. In this example I know that the function only targets the Email field but it’s wise to check the errors collection that comes back in case other model errors were added in the server function (see the commented-out code below). The aErrors collection is keyed on the client side ID of the invalid field/s :

    function RemoteValidation_UniqueEmailAddress_response( bIsValid, aErrors, oValidator)
    {
        if( bIsValid == false)
        {
            /* This bit is optional
            for( var sId in aErrors)
            {
                $("label[for=" + sId + "]").addClass( "error").css( "font-weight", "bold");
            }*/

            $("label[for=Email]").addClass( "error").css( "font-weight", "bold");
        }
        else
        {
            $("label[for=Email]").removeClass( "error").css( "font-weight", "normal");
        }

        return true;
    }
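The naming convention the hack relies on can be tried in isolation. This is a sketch only: responseCallbackName and notifyCallback are hypothetical helper names, and a plain object stands in for window so it runs outside a browser.

```javascript
// Derives the callback name the same way the hack does:
// last URL segment plus "_response".
function responseCallbackName(url) {
  const parts = url.split("/");
  return parts[parts.length - 1] + "_response";
}

// Looks the callback up on a scope object (window in the browser) and calls
// it if present. Returns true when the default error display should still run.
function notifyCallback(scope, url, isValid, errors, validator) {
  const fn = scope[responseCallbackName(url)];
  if (typeof fn === "function") {
    return fn(isValid, errors, validator);
  }
  return true;
}

const scope = {
  RemoteValidation_UniqueEmailAddress_response(isValid, errors) {
    scope.lastCall = { isValid, errors };
    return false; // suppress the default display in this example
  },
};

console.log(responseCallbackName("/Account/RemoteValidation_UniqueEmailAddress"));
// RemoteValidation_UniqueEmailAddress_response
console.log(notifyCallback(scope, "/Account/RemoteValidation_UniqueEmailAddress", false, { Email: "taken" }, null)); // false
console.log(notifyCallback(scope, "/Account/SomethingElse", true, null, null)); // true
```

One limitation worth knowing: because the name comes from the last URL segment, a URL with a trailing slash or a query string would produce a name that matches no function, and the default display would simply run.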

The first argument to the function is whether there is an error or not. The second parameter is the errors collection. Now when I enter an invalid email address my form looks like this

That’s better but something is still bothering me

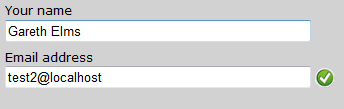

Success messages would be nice

As well as catering for validation errors you now get the chance to provide success feedback as well, which can be just as important as error feedback. For example if the user is registering on a site and choosing a username the validation could check that the username is available. If it is then you can present a jolly green tick to the user. This completely removes ambiguity, especially if the previous username they entered was already taken and they saw a validation error.

One last bit of code :

    var g_bEmailHasBeenInErrorState = false;

    function RemoteValidation_UniqueEmailAddress_response( bIsValid, aErrors, oValidator)
    {
        if( bIsValid == false)
        {
            $("label[for=Email]").addClass( "error").css( "font-weight", "bold");
            g_bEmailHasBeenInErrorState = true;
        }
        else
        {
            $("label[for=Email]").removeClass( "error").css( "font-weight", "normal");

            if( g_bEmailHasBeenInErrorState)
            {
                g_bEmailHasBeenInErrorState = false;
                $("#Email").after( "<img style='float:left;' src='<%=Url.Content( "~/Content/img/tick.gif")%>' alt='This is a valid email for this web site' />");
            }
        }

        return true;
    }

Hang on a minute. Why isn’t this functionality in jquery.validate.js anyway? Go to your room!

I didn’t refer once to AJAX because :

- XML is moribund everywhere but the enterprise.

- IE had javascript HTTP requests before the web existed.

- Prior to that you could just screen scrape a hidden iframe for crying out loud!

MongoDB and ASP.NET MVC – A basic web app

I first heard about document databases (NoSQL) last year thanks to Rob Conery’s blog post. At the time I couldn’t imagine a database without SQL but there really is such a thing and MongoDB is a leading example. Today I’ve spent some time working with MongoDB putting together a basic ASP.NET MVC web application to CRUD a bugs database.

A document database differs from a relational database in that, when you can get away with it, a record (known as a document here) can contain all its own data. It is not necessary to have normalised tables with joins pulling data together into a result set. Instead of having an order table, a product table, a customer table, an address table etc… you can have a single record containing all this information.

Here is an example of a single record for a user :

    {
        "name": "Mr Card Holder",
        "email": "[email protected]",
        "bankAccounts":
        [
            {
                "number": "12332424324",
                "sortCode": "12-34-56"
            },
            {
                "number": "8484949533",
                "sortCode": "11-33-12"
            }
        ],
        "address":
        {
            "address1": "100 The Big Road",
            "address2": "Islington",
            "address3": "London",
            "postCode": "LI95RF"
        }
    }

And that’s how the record is stored in the database; the exact opposite of a normalised database. The user’s bank accounts are stored in the user document. In this example a user’s bank accounts are unique to that user so it’s ok to store them right there. But what about when the associated information needs to be more centralised such as an author’s list of published books? What happens when there are multiple authors on a book? In this case you would have a separate collection for the books and put the list of book ObjectIds in the author document. Like this :

    {
        "name": "Mr Author",
        "email": "[email protected]",
        "publishedBooks":
        [
            {"id": "12332424324"},
            {"id": "5r4654635654"},
            {"id": "5654743"}
        ],
        "address":
        {
            "address1": "142 The Other Road",
            "address2": "Kensington",
            "address3": "London",
            "postCode": "L92RF"
        }
    }

After loading the author from MongoDB you would then perform a second query to load the associated books.
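The two-step load can be sketched with in-memory arrays standing in for the two collections. This is plain JavaScript, not the MongoDB driver: findAuthor and findBooksByIds are hypothetical helpers, and the ids, names and titles are invented.

```javascript
// In-memory stand-ins for the books and authors collections.
const books = [
  { _id: "b1", title: "First Book" },
  { _id: "b2", title: "Joint Book" },
  { _id: "b3", title: "Other Book" },
];
const authors = [
  { _id: "a1", name: "Mr Author", publishedBooks: [{ id: "b1" }, { id: "b2" }] },
  { _id: "a2", name: "Ms Coauthor", publishedBooks: [{ id: "b2" }, { id: "b3" }] },
];

// Query 1: load the author document.
function findAuthor(id) {
  return authors.find(a => a._id === id) || null;
}

// Query 2: load every referenced book in one go
// (the equivalent of an "in" filter on the id).
function findBooksByIds(ids) {
  const wanted = new Set(ids);
  return books.filter(b => wanted.has(b._id));
}

const author = findAuthor("a1");
const authorBooks = findBooksByIds(author.publishedBooks.map(ref => ref.id));
console.log(authorBooks.map(b => b.title)); // [ 'First Book', 'Joint Book' ]
```

"Joint Book" is stored once but referenced by both authors, which is the reason for keeping books in their own collection.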

The data is stored in BSON format (a binary form of JSON). You still get indexes and the equivalent of where clauses, so the simplicity does not come at the cost of functionality. Pretty much the only thing you don’t get is complex transactions.

I wanted to try this out for myself so I installed MongoDB and saw it working from the command line and then started looking into a C# driver. After a quick look around the internet it seems that there are two main options for C# drivers :

- Sam Corder’s community supported driver, which includes Linq support

- The “official” C# driver from the MongoDB developers (no Linq yet)

I chose the official driver because, although it isn’t as mature as Sam Corder’s driver, I’d already started reading the docs for it.

A day in Visual Studio and a JSON Viewer

First I wrote a very rudimentary membership provider that uses the Mongo database. This lets you register on the site and then log in, which is enough for my test. Hopefully the MongoDB guys will release a complete ASP.NET membership provider soon.

Secondly I wrote an ASP.NET MVC controller, domain class and MongoDB bug repository to help me figure out how to work with a Mongo database using C#. The BugRepository class contains database code like this :

    public Bug Read( string sId)
    {
        MongoServer oServer = MongoServer.Create();
        MongoDatabase oDatabase = oServer.GetDatabase( "TestDB");
        MongoCollection<Bug> aBugs = oDatabase.GetCollection<Bug>( "bugs");
        Bug oResult = aBugs.FindOneById( ObjectId.Parse( sId));
        return oResult;
    }

    public Bug Update( Bug oBug)
    {
        MongoServer oServer = MongoServer.Create();
        MongoDatabase oDatabase = oServer.GetDatabase( "TestDB");
        MongoCollection<Bug> aBugs = oDatabase.GetCollection<Bug>( "bugs");
        aBugs.Save( oBug);
        return oBug;
    }

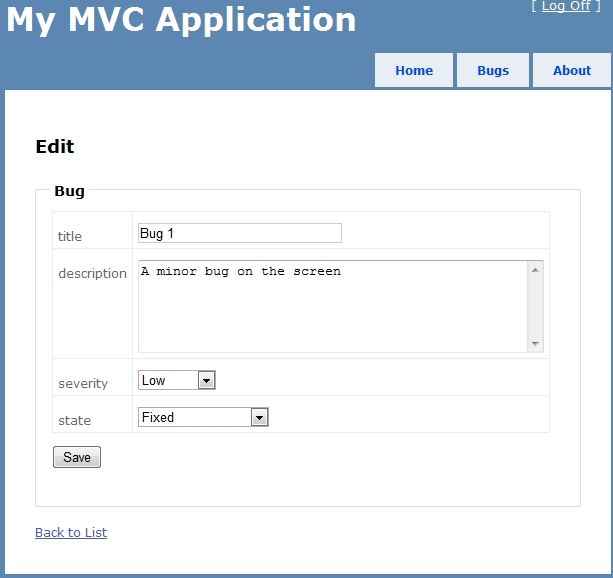

If, like me, you are just getting started using MongoDB with C# you may find it useful to see the code so here is the source code for the test app. You’ll need Visual Studio 2010 and MVC 3. This is what you’ll get :

My main resources while figuring this out were :

- The MongoDB C# driver tutorial

- Craig G Wilson’s article on The Code Project

- Membership Provider example at Itslet.nl

One more thing. Document databases are absolutely perfect for content management systems. One of the challenges for a CMS is handling dynamic schemas and in relational databases this usually involves at least two generic looking tables. To a document database the collection schema is a trivial concept; it just doesn’t care! You can add new properties to a collection whenever you feel like it, even when there is a huge amount of data already there. Drupal has already integrated MongoDB and I expect more to follow.
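A tiny sketch of what "it just doesn’t care" means in practice, using a plain JavaScript array as a stand-in for a collection. The page documents and field names are invented for illustration.

```javascript
// One collection, documents with different shapes.
const pages = [
  { _id: 1, title: "About us", body: "Who we are" },
  { _id: 2, title: "Summer sale", body: "Everything must go", promoCode: "SUN10" },
];

// Adding a property later touches only the documents that need it;
// there is no table to alter and older documents simply lack the field.
pages.push({ _id: 3, title: "Contact", body: "Find us here", mapUrl: "/maps/office" });

// The flip side: queries have to tolerate the missing field.
const withPromo = pages.filter(p => p.promoCode !== undefined);
console.log(withPromo.map(p => p.title)); // [ 'Summer sale' ]
```

The cost moves from the schema to the reading code, which has to cope with documents that predate a given property.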

My take on .NET CMS’s – Orchard

I threw myself at Orchard for 3 months from August 2010 to November just when version 0.5 had been released. Orchard is based on ASP.NET MVC and at the moment is an open source project financially backed by Microsoft. There are some very clever chaps working on Orchard.

I really tried to use the early 0.5 version of Orchard. The module development infrastructure was baked in so I started creating simple modules by going through the docs. If I had had this blog at the time I would have blogged in detail about how to start working on Orchard modules, as well as a FAQ of all the error messages I’d received and how I fixed them, which would have been useful for developers to see.

Wow look at this code!

At first I was impressed by the quality of the code and the high level of experience and expertise that has clearly gone into Orchard. They’ve also used lots of open source libraries which introduced me to lots of new concepts and .NET code I would otherwise have never realised was possible. But in the end I just gave up!

Wow. I suck!

In a nutshell it was too hard to get results with the 0.5 version. They’re at version 0.8 now with a 1.0 release coming soon. I gave up at version 0.5 so I can’t say anything about 0.8 or 1.0. My head-against-wall moments could easily have been due to a lack of documentation, which is fair enough as Orchard isn’t even at version 1 yet! The available docs really helped but after I’d read them 20 times I came to the conclusion that I was stuck.

My personal opinion is that in order to produce a flexible CMS in a pre .NET 4 language the code has to be engineered to the point that there is too steep an entry level for a typical enthusiastic module developer. I base this on having worked with Kooboo and Orchard as well as Drupal and WordPress.

I also have the opinion that new CMS’s (not just Orchard) think they can push things off to the community too early.

“We can’t do that module the community must do that. Yes that ungrateful lot over there. Look at them, expecting us to do everything. Idiots”

Do they think they can just follow the “WordPress model” and watch the magic unfold? A community like WordPress’ cannot be prescribed; it simply comes down to how easy and flexible the platform is for a module developer to use, combined with good support.

I have now officially dropped the idea of “creating websites for people”. It was just something to fill the time and soak up my flailing eagerness while I found a better idea.

The idea of creating websites for people now makes me sick. Now I should stop writing blog posts about technology I am no longer using and focus on my real passions.